Recently I have been hanging out with some rationalist folks who take the idea of superintelligent AI very seriously, and believe that we need to be working on how to make sure that if such a thing comes into being, it doesn՚t destroy humanity. My first reaction is to scoff, but I then remind myself that these are pretty smart people and I don՚t really have any very legitimate grounds to act all superior. I am older than they are, for the most part, but who knows if I am any wiser.

So I have a social and intellectual obligation to read some of the basic texts on the subject. But before I actually get around to that, I wanted to write a pre-critique. That is, these are all the reasons I can think of to not take this idea seriously, but they may not really be engaging with the strongest forms of the argument for. So I apologize in advance for that, and also for the slight flavor of patronizing ad hominem. My excuse is that I need to get these things off my chest if I can have any hope of taking the actual ideas and arguments more seriously. So this is maybe a couple of notches better than merely scoffing, but perhaps not yet a full engagement.

1 Doom: been there, done that

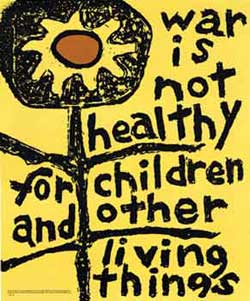

I՚ve already done my time when it comes to spending mental energy worrying about the end of the world. Back in my youth, it was various nuclear and environmental holocausts. The threat of these has not gone away, but I eventually put my energy elsewhere, not for any real defensible reason besides the universal necessity to get on with life. A former housemate of mine became a professional arms controller, but we can՚t all do that.

I suspect there is a form of geek-macho going on in such obsessions, an excuse to exhibit intellectual toughness by displaying the ability to think clearly about the most threatening things imaginable without giving in to standard human emotions like fear or despair. However, geeks do not really get a free pass on emotions; that is, they have them, they just aren՚t typically very good at processing or expressing them. So thinking about existential risk is really just an acceptable way to think about death in the abstract. It becomes an occasion to figure out one՚s stance towards death. Morbidly jokey? A clear-eyed warrior for life against death? Standing on the sidelines being analytical? Some combination of the above?

2 No superintelligence without intelligence

Even if I try to go back to the game of worrying about existential catastrophes, superintelligent AIs don՚t make it to the top of my list, compared to more mundane things like positive-feedback climate change and bioterrorism. In part this is because the real existing AI technology of today isn՚t even close to normal human (or normal dog) intelligence. That doesn՚t mean they won՚t improve, but it does mean that we basically have no idea what such a thing will look like, so purporting to work on the design of its value systems seems a wee bit premature.

3 Obscures the real problem of non-intelligent human-hostile systems

See this earlier post. This may be my most constructive criticism, in that I think it would be excellent if all these very smart people could pull their attention from the science-fiction-y and look at the real risks of real systems that exist today.

4 The prospect of an omnipotent yet tamed superintelligence seems oxymoronic

So let՚s say despite my cavils, superintelligent AI fooms into being. The idea behind “friendly AI” is that such a superintelligence is basically inevitable, but it could happen in a way either consistent with human values or not, and our mission today is to try to make sure it՚s the former, e.g. by assuring that its most basic goals cannot be changed. Even if I grant the possibility of superintelligence, this seems like a very implausible program. This superintelligence will be so powerful that it can do basically anything and exploit any regularities in the world to achieve its ends, and it will be radically self-improving and self-modifying. This exponential growth curve in learning and power is fundamental to the very idea.

To envision such a thing and still believe that its goals will be somehow locked into staying consistent with our own ends seems implausible and incoherent. It՚s akin to saying we will create an all-powerful servant who somehow will never entertain the idea of revolt against his master and creator.

5 Computers are not formal systems

This probably deserves a separate post, but I think the deeper intellectual flaw underlying a lot of this is the persistent habit of thinking of computers as some kind of formal system for which it is possible to prove things beyond any doubt. Here՚s an example, more or less randomly selected:

> …in order to achieve a reasonable probability that our AI still follows the same goals after billions of rewrites, we must have a very low chance of going wrong in every single step, and machine-verified formal mathematical proofs are the one way we know to become extremely confident that something is true…. Although you can never be sure that a program will work as intended when run on a real-world computer — it’s always possible that a cosmic ray will hit a transistor and make things go awry — you can prove that a program would satisfy certain properties when run on an ideal computer. Then you can use probabilistic reasoning and error-correcting techniques to make it extremely probable that when run on a real-world computer, your program still satisfies the same property. So it seems likely that a realistic Friendly AI would still have components that do logical reasoning or something that looks very much like it.

Notice the very lightweight acknowledgement that an actual computational system is a physical device before hurriedly sweeping that fact under the rug with some more math hacks. Well, OK, that lets the author continue to do mathematics, which is clearly something he (and the rest of this crowd) like to do. Nothing wrong with that. However, I submit that computation is actually more interesting when one incorporates a full account of its physical embodiment. That is what makes computer science a different field from mathematical logic.

But intellectual styles aside, if considering a theory of safe superintelligent programs, one damn well better have a good theory about how they are embodied, because that will be fundamental to the issue of safety. A normal program today may be able to modify a section of RAM, but it can՚t modify its own hardware or interpreter, because of abstraction boundaries. If we think we can rely on abstraction boundaries to keep a formal intelligence confined, then the problem is solved. But there is no very good reason to assume that, since real non-superintelligent black-hat hackers today specialize in violating abstraction boundaries with some success.

6 Life is not a game

One thing I learned by hanging out with these folks is that they are all fanatical gamers, and as such are attuned to winning strategies, that is, they want to understand the rules of the game and figure out some way to use them to triumph over all the other players. I used to be sort of like that myself, in my aspergerish youth, when I was the smartest guy around (that is, before I went to MIT and instantly became merely average). I remember playing board games with normal people and just creaming them, coldly and ruthlessly, because I could grasp the structure of the rules and wasn՚t distracted by the usual extra-game social interaction. Would defeating this person hurt them? Would I be better off letting them win so we could be friends? Such thoughts didn՚t even occur to me, until I looked back on my childhood from a few decades later. In other words, I was good at seeing and thinking about the formal rules of an imaginary closed system, not as much about the less-formalized rules of actual human interaction.

Anyway, the point is that I suspect these folks are probably roughly similar to my younger self and that their view of superintelligence is conditioned by this sort of activity. A superintelligence is something like the ultimate gamer, able to figure out how to manipulate “the rules” to “win”. And of course it is even less likely to care about the feelings of the other players in the game.

I can understand the attraction of the life-as-a-game viewpoint, whether conscious or unconscious. Life is not exactly a game with rules and winners, but it may be that it is more like a game than it is anything else; just as minds are not really computers but computers are the best model we have for them. Games are a very useful metaphor for existence; however, it՚s pretty important to realize the limits of your metaphor, to not take it literally. Real life is not played for points (or even for “utility”) and there are no winners and losers.

7 Summary

None of this amounts to a coherent argument against the idea of superintelligence. It՚s more of a catalog of attitudinal quibbles. I don՚t know the best path towards building AI (or ensuring the safety of AIs); I just have pretty strong intuitions that this isn՚t it.